How to ensure data scalability?

To fully unlock its potential, AI needs access to an ever-growing volume of information, which inevitably leads to an increase in data volume. Therefore, ensuring data scalability is essential. How can you take care of it from the very beginning of an IT project? Here we share our proven methods

When we talk about scaling data, we often think primarily about acquiring more of it. That’s true—data scalability often means expanding the number of sources we collect from. But that’s only one side of the coin. The other side involves what happens next—how we process, analyze, present, and use this data, for example, through AI agents. This is where the need for scalable infrastructure and software comes into play.

Table of contents

- What is data scalability?

- Why is scalability important?

- What is database scalability?

- How does containerization affect scalability?

- Why scalability starts at the application design stage

- Can analytics paralyze a bank? (And how to prevent it through replication)

- How a data warehouse helps reveal what you can’t see in the production system

What is data scalability?

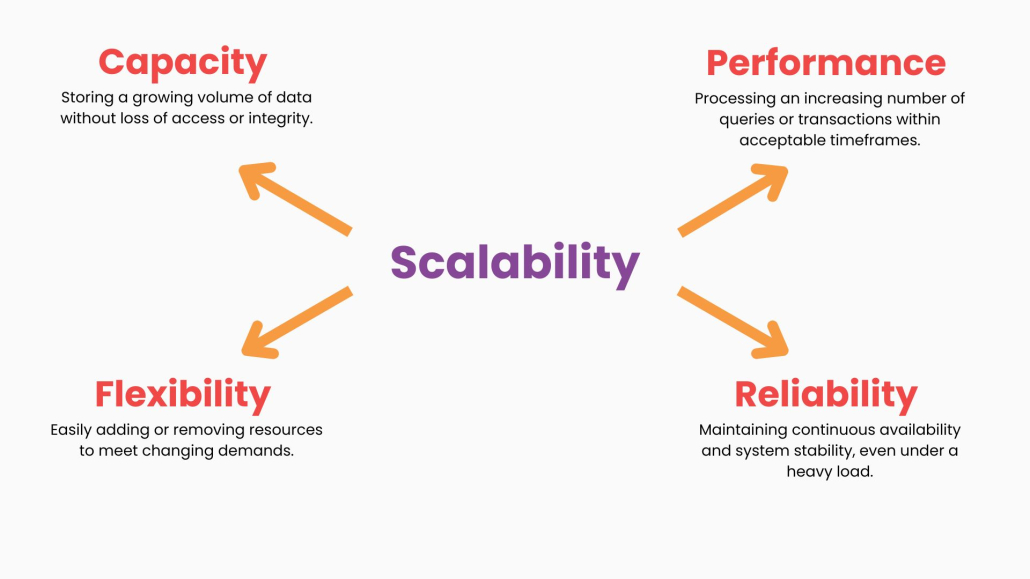

Data scalability is the ability of systems to efficiently manage a growing amount of data while maintaining high performance, without the need for costly and time-consuming infrastructure changes.

Data management plays a key role in ensuring data availability, usability, security, and compliance. But scalability alone isn’t everything. Learn more about preparing your key data for the AI era in our article: How to prepare data for the AI era?

Why is scalability important?

Think of your company’s data as assets that grow along with your business. If your company experiences growth in the number of customers, transactions, or monitored production parameters, the amount of cached data increases dramatically. A robust database infrastructure is essential for achieving this scalability, impacting query performance and system efficiency. Systems that are not scalable will eventually fail to efficiently process and store this information. This can lead to slowdowns, system failures, and consequently,financial losses and loss of customer trust.

Scalability allows for flexible responses to business and market needs. Scalable systems are an investment in a company’s future, opening the door to innovation and competitive advantage. Why is it so crucial?

- It lays the foundation for dynamic growth, enabling the smooth handling of an increasing number of customers and operations.

- It provides a solid base for advanced analytics tools and AI, which drive innovation by delivering valuable insights and enabling process automation.

- It ensures smooth company operations and customer satisfaction by maintaining optimal system performance, even with skyrocketing data volumes.

- Scalable systems offer flexibility, allowing quick adaptation to new resources and changing business and technology requirements—giving companies an edge in a dynamic world.

What is database scalability?

Database scalability refers to the ability of a database to handle increasing workloads and growing data volumes without compromising performance or responsiveness. This is critical for modern applications, as it enables organizations to provide high-performance services to many users.

Vertical scaling and horizontal scaling are two primary strategies for achieving database scalability. Vertical scaling involves increasing the power of a single server, such as adding more CPU, RAM, or storage. While this can be effective, it has limitations and can become costly. Horizontal scaling, on the other hand, involves adding more servers to distribute the workload across multiple nodes. This approach offers greater flexibility and can handle larger data volumes more efficiently.

Containerization: the key to horizontal scaling

We are currently developing an application that scans job advertisement portals and creates statistics: how many job offers appear in each region, city, and industry. Initially, we scan 2–3 portals, which already brings value to our client. But if we start scanning 10 or 20 sources, we’ll gain an incomparably broader view of the market. This is data scaling: expanding sources while aggregating and analyzing data of a similar nature.

Data acquisition is just the beginning

The more data we collect, the greater the possibilities for analysis and matching results. However, this also means we must handle the growing volume effectively. Say we used to search for two interesting job offers among 100,000 listings, and now we have a million, our tool must be ready to sift through a much larger “haystack” to find the “needle.” In other words, processing larger amounts of data is a key aspect of scaling.

Scaling data forces database scalability

As the number of sources and data volume grows, we must also provide adequate hardware resources and tools. If one application can scan one portal at a time, we will need many parallel instances to scan 20 portals. Otherwise, the database will be overloaded, and the process will take too long.

This is where containerization and orchestration come in. Containers allow us to “package” our application into a lightweight, portable unit—like a virtual computer that contains everything it needs to run. Once one container works correctly, we can simply duplicate it—like copy-pasting a file—without reinstalling everything from scratch. This makes scaling additional instances fast, inexpensive, and almost immediate. If containers are run in the cloud on serverless platforms (such as AWS Fargate, Google Cloud Run, or Azure Container Instances), users pay only for the computing power they consume, allowing for optimal resource utilization.

When we add a container management system—so-called orchestration—we can automate the entire process, running any number of instances in parallel. This is what automatic infrastructure scaling looks like: growing resources at a pace that matches our data processing needs.

Everything connects in the end

We scale data—we collect more of it. But for it to make sense, we must also scale the systems that process it. More data means more value but also more challenges. Only the combination of scalable data, infrastructure, and applications delivers real results: fast, accurate, and valuable data processing that meets real user needs.

Application scaling begins at the design stage

A common mistake in early-stage app development is focusing solely on a single, specific use case. Carefully managing schema changes and modifications within distributed databases is crucial. Updating and validating database structures helps minimize disruption to ongoing operations and adapt to evolving business requirements. While this approach might work in the short term, it often leads to endless patching and feature additions, making the system hard to maintain over time.

The right approach, practiced by professional service providers, is the opposite. Instead of focusing on just one “use case,” it’s better to consider at least a dozen scenarios during the design phase. This way, the data model and system architecture will be more flexible and ready for future extensions—without costly overhauls.

It’s like building a house. If you start with just one room without planning the whole structure, it may later be problematic (and expensive) to connect water to the kitchen or bathroom. Likewise, if your initial data model didn’t include, say, an employer’s address, adding it later could require reprocessing all previous records—wasting time and resources.

The more such modifications pile up, the more time is spent catching up with previous decisions rather than developing new features. Eventually, data processing becomes an end in itself rather than a means to achieve business goals.

Thus, the key to scalability is not just technology or infrastructure—it’s mindset: thinking broadly, flexibly, and with future needs in mind. In the data and AI era, this approach is a necessity, not a luxury.

Database replication in banking

Banking is a classic example of large-scale data exchanges. Even a small bank in Poland can have massive customer databases. One million clients mean at least a million entries in customer tables—each typically with multiple accounts: credit cards, debit cards, savings—quickly translating to millions of records. And that’s just the beginning—there are statements, blocks, confirmations, and other data generated monthly.

The banking system must not only store this data but also handle real-time operations: authorizing payments, calculating interest, posting transactions—often tens of thousands per second. At the same time, it must answer analytical queries like “review 10 million transactions and find patterns.” Under such a load, the system can choke.

This is why real-time database replication is used: creating a faithful copy of the data on another server. The primary database handles real-time operations, while the replicated one handles reports and analytics. This way, analytics do not slow down the production system, and the data is “fresh.”

This solution has become necessary because transaction volumes grow every year. Where credit cards were once rare, today we pay with cards, BLIK, and smartphones. Business and technologies change—and systems must keep up. Scalability is not just about more data—it’s about flexibility, task division, and readiness for growth.

Distributing data across multiple servers enhances performance, resilience, and fault tolerance, which is crucial for managing increasing data volumes and concurrent users.

Data Warehousing: Tracking changes you can’t see at first glance

Data warehousing works similarly to replication, but not in real time. Data typically flows into a warehouse once a day or at scheduled intervals. This is sufficient because a data warehouse serves a different type of analysis than a production system. It’s not about speed—it’s about tracking changes over time.

Example? Card status—active or inactive. In a production system, you only see the current status. Today the card is active. Tomorrow—it’s still active. The day after—also active. Then suddenly it’s inactive. But when exactly did it change? The production system won’t tell you—it doesn’t store a history of changes.

This is where the data warehouse steps in. If it records the status daily, it builds a historical record. Day by day: active, active, active… then inactive, inactive… and active again. Thanks to this, you can easily determine that the card was inactive for two days and when exactly it happened. This analysis isn’t possible with production data alone.

It’s the same with account balances. Without historical data, you only see the current balance. In an hour, it could change. Tomorrow it will change again. Next week—different again. But if you want to know your average monthly balance—you need to know your account history. . With daily balance snapshots stored in a warehouse, it’s easy: sum up all values and divide by the number of days.

This shows that a data warehouse lets you scale “over time”—adding a time dimension to seemingly unchanging data. You’re not adding new data—you’re adding new insights. Data plus time gives a completely different quality.

It’s a form of scaling — not by volume, rather by analytical depth.

Summary

Scalable data means scalable business! Ensuring data scalability is fundamental to building modern, future-ready IT infrastructure. It allows companies to effectively manage growing volumes of information, support data-driven innovations like AI, and maintain a competitive edge. Containerization and orchestration help with data scaling. In banking, replication and data warehousing are commonly used. Scaling resources effectively balances performance and cost, especially when dealing with fluctuating workloads.

Investing in data scalability is investing in your company’s future. Want to ensure the quality and security of your data? Book a meeting with Jacek!